I have just finished my Honours degree, and my thesis is titled "Random Access Rendering of Animated Vector Graphics Using GPU". It builds on previous work by Nehab & Hoppe. The full text of my thesis is available here.

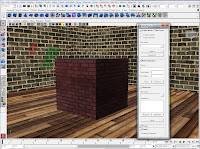

The meaning of "random access" here is similar to its use in the term random-access memory (RAM): the color of the image can be queried at an arbitrary coordinate within the image space. Efficient random access to image data is essential for texture mapping, and has traditionally been associated with raster images.
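For a raster image, random access is almost trivial: a coordinate maps directly to one stored texel. The short Python sketch below shows a nearest-neighbour lookup to make that constant-time query concrete. The function name `sample_nearest` and the list-of-rows image layout are illustrative assumptions, not part of any particular graphics API.

```python
def sample_nearest(texels, u, v):
    """Return the color of a raster image at normalised coordinates (u, v) in [0, 1].

    texels is a list of rows (height x width) of color values. The lookup is
    constant time: the coordinate maps straight to one texel, and no other
    part of the image has to be read.
    """
    height = len(texels)
    width = len(texels[0])
    # Map [0, 1] to a texel index, clamping so that u == 1.0 stays in range.
    x = max(0, min(int(u * width), width - 1))
    y = max(0, min(int(v * height), height - 1))
    return texels[y][x]


# A 2x2 checkerboard: black top-left and bottom-right, white elsewhere.
image = [
    ["black", "white"],
    ["white", "black"],
]
print(sample_nearest(image, 0.1, 0.1))  # black
print(sample_nearest(image, 0.9, 0.1))  # white
```

A vector image has no such direct mapping: in principle every shape has to be tested to decide which one covers a given point, which is what makes random access to vector data hard.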

The novel approach to random access rendering introduced by Nehab & Hoppe is to preprocess the vector image, localize its data into cells, and build a fast look-up structure that is queried during rendering. In short, it allows vector images to be texture-mapped efficiently onto curved surfaces. However, their CPU-based localisation was far too slow for real-time use with animated vector images, which have to be re-localised whenever the image changes.
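To illustrate the general idea of localisation, here is a heavily simplified Python sketch. It is not Nehab & Hoppe's encoding: filled circles stand in for real vector paths, a uniform grid over [0, 1]² stands in for their cells, and each cell simply lists the shapes whose bounding box overlaps it. The names `Circle`, `localize` and `query` are hypothetical. What it does show is the key property: a query only tests the few shapes stored in one cell, rather than every shape in the image.

```python
import math
from dataclasses import dataclass


@dataclass
class Circle:
    """A filled circle: a simplified stand-in for a real vector primitive."""
    cx: float
    cy: float
    r: float
    color: str

    def contains(self, x, y):
        return (x - self.cx) ** 2 + (y - self.cy) ** 2 <= self.r ** 2


def localize(shapes, n):
    """Preprocess: add each shape to every cell of an n x n grid over [0, 1]^2
    that its bounding box overlaps. Cell lists keep shapes in drawing order."""
    cells = [[[] for _ in range(n)] for _ in range(n)]
    for i, s in enumerate(shapes):
        # Range of cells covered by the bounding box, clamped to the grid.
        x0 = max(0, math.floor((s.cx - s.r) * n))
        x1 = min(n - 1, math.floor((s.cx + s.r) * n))
        y0 = max(0, math.floor((s.cy - s.r) * n))
        y1 = min(n - 1, math.floor((s.cy + s.r) * n))
        for gy in range(y0, y1 + 1):
            for gx in range(x0, x1 + 1):
                cells[gy][gx].append(i)
    return cells


def query(shapes, cells, x, y, background="white"):
    """Random access: only shapes listed in the cell containing (x, y) are tested."""
    n = len(cells)
    gx = max(0, min(math.floor(x * n), n - 1))
    gy = max(0, min(math.floor(y * n), n - 1))
    # Later shapes are drawn on top, so search the cell's list back to front.
    for i in reversed(cells[gy][gx]):
        if shapes[i].contains(x, y):
            return shapes[i].color
    return background


shapes = [Circle(0.3, 0.3, 0.2, "red"), Circle(0.4, 0.4, 0.2, "blue")]
cells = localize(shapes, 4)
print(query(shapes, cells, 0.35, 0.35))  # blue (both overlap; blue is on top)
print(query(shapes, cells, 0.2, 0.2))    # red
print(query(shapes, cells, 0.9, 0.9))    # white (empty cell)
```

The preprocessing cost is paid once per image; for an animated image it has to be paid again every time the shapes move, which is exactly why a slow localisation step rules out real-time animation.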

My goal was to improve their approach to vector image localisation by parallelizing the encoding algorithm and implementing it on the GPU, taking full advantage of its parallel architecture so that the technique can be applied to animated images. In my thesis, I present an alternative look-up structure that is better suited to parallelism, together with an efficient parallel encoding algorithm. This achieved significant performance improvements in both the encoding and rendering stages. In most cases, my rendering approach even outperforms the traditional forward rendering approach.
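Why does the choice of look-up structure matter for parallelism? Growable per-cell lists, as in a straightforward CPU implementation, are awkward on a GPU. A widely used alternative, which is not necessarily the structure from my thesis, is to store all cell entries in one flat array and build it in three data-parallel passes: count entries per cell, take an exclusive prefix sum to get each cell's start offset, then scatter the entries into place. The Python sketch below shows that pattern; `build_cell_lists` is a hypothetical name, and the loops run sequentially here only so the example is runnable without a GPU.

```python
from itertools import accumulate


def build_cell_lists(pairs, num_cells):
    """Build a flat per-cell index from (cell_id, shape_id) pairs.

    Each loop below corresponds to one data-parallel GPU pass.
    """
    # Pass 1 (count): on a GPU, one thread per pair atomically increments counts[cell].
    counts = [0] * num_cells
    for cell, _ in pairs:
        counts[cell] += 1

    # Pass 2 (scan): an exclusive prefix sum gives each cell's start offset.
    offsets = list(accumulate(counts, initial=0))[:-1]

    # Pass 3 (scatter): each pair writes its shape id into its cell's slot range.
    # On a GPU the slot would come from an atomic fetch-and-add on a per-cell
    # cursor, so the order within a cell is not guaranteed there.
    cursor = list(offsets)
    shape_ids = [0] * len(pairs)
    for cell, shape in pairs:
        shape_ids[cursor[cell]] = shape
        cursor[cell] += 1

    return offsets, counts, shape_ids


pairs = [(2, 0), (0, 1), (2, 1), (1, 2)]
offsets, counts, shape_ids = build_cell_lists(pairs, 3)
print(offsets, counts, shape_ids)  # [0, 1, 2] [1, 1, 2] [1, 2, 0, 1]
# Cell c's shapes are shape_ids[offsets[c] : offsets[c] + counts[c]].
```

Every pass touches each element independently (apart from the atomics and the scan, both of which have efficient GPU implementations), so the whole build scales with the number of GPU threads. If drawing order matters within a cell, a per-cell sort by shape index can restore it after the scatter.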