Over the last few years, while studying Games & Graphics Programming at RMIT, I have also been working on my own game engine as a hobby project. A complete game engine is a complex beast made up of many subsystems: rendering, animation, sound, physics, AI, resource management, an export pipeline and more. Trying to implement everything from scratch would simply be crazy, so I focused mostly on three objectives.

This demo is available for download here: http://www.ivanleben.com/Demo/EngineDemo.zip

Firstly, I wanted to implement a modern renderer with state-of-the-art visual effects. Secondly, I wanted to implement an animation system supporting both skeletal and cutscene (scene-wide) animation. Finally, I wanted to build a content pipeline for models, animations and scenes (maps) to bring resources from content-creation software such as Maya into the game engine.

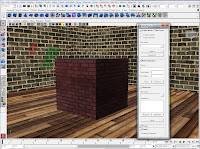

Following the trend of many other recent game engines, I decided to implement deferred rendering, which allows complex lighting scenarios with hundreds of lights while still rendering the scene efficiently. This approach also turned out to lend itself nicely to implementing a depth-of-field effect. I also implemented a high-dynamic-range (HDR) lighting pipeline with tone mapping and a "bloom" effect.
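To make the deferred and HDR stages a bit more concrete, here is a minimal CPU-side sketch of what the lighting and post-processing passes compute for a single pixel. It assumes a G-buffer holding world-space position, normal and albedo, point lights with simple linear falloff, a threshold-based bright pass for bloom and the Reinhard tone-mapping operator. All type and function names are hypothetical and this is not the engine's actual shader code; in practice these steps run as pixel shaders, and lights are usually drawn as light volumes so that each light only touches the pixels it can affect.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
Vec3 operator*(Vec3 a, Vec3 b) { return {a.x * b.x, a.y * b.y, a.z * b.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// One G-buffer texel, written once by the geometry pass (hypothetical layout).
struct GBufferTexel {
    Vec3 position; // world-space position (often reconstructed from depth instead)
    Vec3 normal;   // unit-length surface normal
    Vec3 albedo;   // diffuse colour
};

struct PointLight {
    Vec3 position;
    Vec3 color;    // HDR intensity, components may exceed 1.0
    float radius;  // the light has no effect beyond this distance
};

// Lighting pass: sum the contribution of every light into an unclamped HDR value.
Vec3 shadeTexel(const GBufferTexel& g, const std::vector<PointLight>& lights) {
    Vec3 sum{0, 0, 0};
    for (const PointLight& l : lights) {
        Vec3 toLight = l.position - g.position;
        float dist = std::sqrt(dot(toLight, toLight));
        if (dist >= l.radius || dist == 0.0f) continue; // out of range (or degenerate)
        Vec3 dir = toLight * (1.0f / dist);
        float nDotL = std::max(0.0f, dot(g.normal, dir)); // Lambertian term
        float falloff = 1.0f - dist / l.radius;           // simple linear attenuation
        sum = sum + g.albedo * l.color * (nDotL * falloff);
    }
    return sum;
}

// Bright pass: keep only the energy above a threshold; this is later blurred and added back as bloom.
Vec3 brightPass(Vec3 hdr, float threshold) {
    return {std::max(0.0f, hdr.x - threshold),
            std::max(0.0f, hdr.y - threshold),
            std::max(0.0f, hdr.z - threshold)};
}

// Reinhard tone mapping: maps exposure-scaled HDR values from [0, inf) into [0, 1).
Vec3 tonemapReinhard(Vec3 hdr, float exposure) {
    assert(exposure > 0.0f);
    auto map = [exposure](float c) { float v = c * exposure; return v / (1.0f + v); };
    return {map(hdr.x), map(hdr.y), map(hdr.z)};
}
```

The property that makes deferred shading attractive shows up in `shadeTexel`: geometry is rasterized only once into the G-buffer, so lighting cost grows with lit pixels times lights rather than with the number of objects times lights.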

I used the Maya SDK to create a custom exporter tool. Because Maya's API is C++, I was able to link the plugin directly against my engine library, which let me reuse the engine's own serialization functions. My exporter was somewhat inspired by UnrealEd's and allows static meshes, skeleton poses and character animations to be exported separately. However, since I wasn't going to develop my own editor software, I implemented additional export functions for scene-wide animations, allowing cutscene sequences to be constructed. The exporter encodes all these resources into package files that the engine can load directly.
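As an illustration of what such a package file might look like, the sketch below writes named, typed resource blobs into a single binary buffer and reads them back. The layout (a magic number, an entry count, then a length-prefixed name, a type tag and a length-prefixed payload per entry), the `ResourceType` values and every name here are assumptions for illustration only; in the real pipeline the payload bytes would come from the engine's own serialization functions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// Hypothetical resource kinds; the real exporter's categories may differ.
enum class ResourceType : std::uint32_t { StaticMesh = 1, SkeletonPose = 2, Animation = 3, SceneAnimation = 4 };

struct PackageEntry {
    std::string name;                  // lookup key used by the engine at load time
    ResourceType type;
    std::vector<std::uint8_t> payload; // bytes produced by the engine's serializer
};

// Appends values in host byte order (no endianness conversion in this sketch).
class ByteWriter {
public:
    std::vector<std::uint8_t> data;
    void u32(std::uint32_t v) { raw(&v, sizeof v); }
    void str(const std::string& s) { u32(static_cast<std::uint32_t>(s.size())); raw(s.data(), s.size()); }
    void bytes(const std::vector<std::uint8_t>& b) { u32(static_cast<std::uint32_t>(b.size())); raw(b.data(), b.size()); }
private:
    void raw(const void* p, std::size_t n) {
        if (n == 0) return;
        const auto* c = static_cast<const std::uint8_t*>(p);
        data.insert(data.end(), c, c + n);
    }
};

// Reads back what ByteWriter wrote, throwing if the buffer is too short.
class ByteReader {
public:
    explicit ByteReader(const std::vector<std::uint8_t>& d) : data_(d) {}
    std::uint32_t u32() { std::uint32_t v = 0; raw(&v, sizeof v); return v; }
    std::string str() { std::string s(u32(), '\0'); raw(&s[0], s.size()); return s; }
    std::vector<std::uint8_t> bytes() { std::vector<std::uint8_t> b(u32()); raw(b.data(), b.size()); return b; }
private:
    void raw(void* p, std::size_t n) {
        if (n == 0) return;
        if (n > data_.size() - pos_) throw std::runtime_error("truncated package");
        std::memcpy(p, data_.data() + pos_, n);
        pos_ += n;
    }
    const std::vector<std::uint8_t>& data_;
    std::size_t pos_ = 0;
};

constexpr std::uint32_t kPackageMagic = 0x4B434150; // bytes "PACK" on little-endian hosts (hypothetical)

std::vector<std::uint8_t> writePackage(const std::vector<PackageEntry>& entries) {
    ByteWriter w;
    w.u32(kPackageMagic);
    w.u32(static_cast<std::uint32_t>(entries.size()));
    for (const PackageEntry& e : entries) {
        assert(!e.name.empty() && "every resource needs a name to be looked up by");
        w.str(e.name);
        w.u32(static_cast<std::uint32_t>(e.type));
        w.bytes(e.payload);
    }
    return w.data;
}

std::vector<PackageEntry> readPackage(const std::vector<std::uint8_t>& data) {
    ByteReader r(data);
    if (r.u32() != kPackageMagic) throw std::runtime_error("not a package file");
    std::uint32_t count = r.u32();
    std::vector<PackageEntry> entries;
    for (std::uint32_t i = 0; i < count; ++i) {
        PackageEntry e;
        e.name = r.str();
        e.type = static_cast<ResourceType>(r.u32());
        e.payload = r.bytes();
        entries.push_back(std::move(e));
    }
    return entries;
}
```

Keeping static meshes, poses, animations and scene-wide animations as separately named entries in one file mirrors the idea of exporting them independently while still loading a whole package in a single read.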

In my third year at university, I took the opportunity to use my game engine in our major project. I teamed up with a couple of digital artists and designers to produce a short demo in the form of an intro to what would be a game similar to Heavy Rain. We tried to use the features of the rendering engine creatively, for example using the depth-of-field effect to steer the player's attention towards an interactive object.
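One common way to drive a depth-of-field effect like this is the thin-lens circle-of-confusion model, c = A · |S2 − S1| / S2 · f / (S1 − f), sketched below. It assumes physically based lens parameters (focal length f, aperture diameter A, focus distance S1) and a hypothetical `focusOn` hook that gameplay code could call to pull focus onto an interactive object; it is not necessarily how the engine computes its blur.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Thin-lens camera parameters, all distances in metres (hypothetical struct).
struct LensParams {
    float focalLength;   // f
    float apertureDiam;  // A, e.g. f / N for f-number N
    float focusDistance; // S1, distance of the plane in perfect focus
};

// Circle-of-confusion diameter on the sensor for a point at distance `depth` (S2).
float circleOfConfusion(const LensParams& lens, float depth) {
    assert(depth > 0.0f && lens.focusDistance > lens.focalLength);
    return lens.apertureDiam * std::fabs(depth - lens.focusDistance) / depth
         * lens.focalLength / (lens.focusDistance - lens.focalLength);
}

// Convert the CoC to a blur radius in pixels, clamped so the blur kernel stays bounded.
float blurRadiusPixels(float coc, float sensorWidth, float imageWidthPx, float maxRadiusPx) {
    float diameterPx = coc / sensorWidth * imageWidthPx;
    return std::min(0.5f * diameterPx, maxRadiusPx);
}

// Hypothetical gameplay hook: put the interactive object in focus so everything else blurs.
LensParams focusOn(LensParams lens, float objectDistance) {
    lens.focusDistance = objectDistance;
    return lens;
}
```

Per pixel, the depth from the G-buffer is fed into `circleOfConfusion`, and the resulting radius controls how widely the colour buffer is sampled. Many real-time engines use a simpler, artist-tuned model instead, such as a blur factor that ramps linearly from zero at the focus distance to a maximum over a chosen range.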

To avoid a combinatorial explosion of shading code arising from many different material and geometry types, I implemented an automatic shader compositor. It is designed as a data-driven graph system in which interconnected processing nodes are defined by their input data, output data and shading code. Every asset in the pipeline can register its own shading nodes, which are automatically inserted into the shading graph based on the flow of data through the network. The automatic shader compositor makes it easy to combine different types of materials with varying numbers of input textures and shading modes, such as diffuse maps, normal maps and cel shading, as well as additional geometry processing such as hardware skinning.
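The following is a minimal sketch of how a data-driven compositor of this kind could resolve its graph. It assumes that each named datum has exactly one producing node and that a later-registered node replaces an earlier producer of the same datum (which is how, in this sketch, a normal-map node overrides a default vertex-normal node). Starting from the requested outputs, it walks backwards to the nodes that produce them and emits their code in dependency order via a depth-first post-order traversal. `ShaderNode`, `ShaderCompositor` and the snippet strings are hypothetical, not the engine's actual API.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <set>
#include <stdexcept>
#include <string>
#include <vector>

// A processing node: consumes named data, produces named data, contributes a code snippet.
struct ShaderNode {
    std::string name;
    std::vector<std::string> inputs;
    std::vector<std::string> outputs;
    std::string code; // shading-language snippet, treated as opaque text here
};

class ShaderCompositor {
public:
    // Assets register nodes; a later node producing the same datum replaces the earlier producer.
    void registerNode(const ShaderNode& node) {
        nodes_.push_back(node);
        for (const std::string& out : node.outputs) producer_[out] = nodes_.size() - 1;
    }

    // Concatenates the code of every node needed to compute `wanted` from `available`,
    // with each node emitted after all the nodes it depends on.
    std::string compose(const std::set<std::string>& available,
                        const std::vector<std::string>& wanted) const {
        std::vector<std::size_t> order;
        std::set<std::size_t> done, visiting;
        for (const std::string& w : wanted) resolve(w, available, order, done, visiting);
        std::string src;
        for (std::size_t i : order) src += nodes_[i].code + "\n";
        return src;
    }

private:
    void resolve(const std::string& datum, const std::set<std::string>& available,
                 std::vector<std::size_t>& order, std::set<std::size_t>& done,
                 std::set<std::size_t>& visiting) const {
        if (available.count(datum)) return; // supplied directly by geometry/material inputs
        auto it = producer_.find(datum);
        if (it == producer_.end()) throw std::runtime_error("no node produces '" + datum + "'");
        std::size_t idx = it->second;
        if (done.count(idx)) return;        // already emitted
        if (!visiting.insert(idx).second) throw std::runtime_error("cycle at node '" + nodes_[idx].name + "'");
        for (const std::string& in : nodes_[idx].inputs) resolve(in, available, order, done, visiting);
        visiting.erase(idx);
        done.insert(idx);
        order.push_back(idx);               // post-order: dependencies come first
    }

    std::vector<ShaderNode> nodes_;
    std::map<std::string, std::size_t> producer_;
};
```

For example, registering a diffuse-texture node (`uv`, `diffuseMap` to `albedo`), a vertex-normal node (`vertexNormal` to `normal`) and a Lambert node (`albedo`, `normal`, `lightDir` to `color`), then requesting `color`, emits those three snippets in that order. Registering a normal-map node that also outputs `normal` swaps it in without any change to the Lambert node, which is the kind of combination the compositor is meant to make cheap.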

Overall, I am quite satisfied with the work I've done on the engine so far. I achieved my goal of implementing several state-of-the-art visual effects along with the supporting asset pipeline, and I learned a lot in the process. In the future I might extend it, either with additional rendering effects or with optimizations and improvements to existing techniques. In particular, I plan to implement screen-space ambient occlusion and explore alternative shadowing approaches, since simple shadow maps give poor results more often than not.